The Importance of Crawling in SEO

Search Engine Optimization (SEO) is a crucial aspect of any digital marketing strategy. It involves various techniques to improve a website’s visibility and ranking on search engine results pages. One fundamental process in SEO is crawling.

What is Crawling?

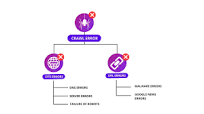

Crawling is the process by which search engine bots, also known as spiders or crawlers, systematically browse the web to discover pages and fetch their content so that it can be indexed. These bots follow links from one page to another and gather information about the content and structure of each page they visit.
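
The link-following behaviour described above can be illustrated with a minimal sketch. It is not how any particular search engine works: the `crawl` function, the `LinkExtractor` class, and the three-page `PAGES` site are all hypothetical, and pages are read from an in-memory dictionary rather than fetched over HTTP.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch):
    """Breadth-first crawl; fetch(url) returns HTML, or None if unreachable."""
    seen = {start_url}
    queue = deque([start_url])
    order = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        order.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments before deduplicating.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return order


# Hypothetical site held in memory (assumption: stands in for real HTTP fetches).
PAGES = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/about#team">Team</a>',
}

visited = crawl("https://example.com/", PAGES.get)
```

Each page is visited once, in the order it was discovered. A page that no other page links to would never enter the queue, which is why internal links matter for discovery.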

Why is Crawling Important for SEO?

Effective crawling is essential for ensuring that search engines can find, understand, and rank your website’s content accurately. Here are some reasons why crawling plays a critical role in SEO:

- Indexing: Crawling allows search engines to index your web pages, making them eligible to appear in search results.

- Discoverability: Through crawling, search engines can discover new content on your site and update existing content in their index.

- Crawl Budget: Search engines allocate a crawl budget to each website, which determines how frequently and how deeply they crawl its pages. Optimizing your site for efficient crawling helps you make the most of that budget, so it is spent on the pages that matter rather than on duplicates or dead ends.

- Identifying Issues: Crawl data, whether from search engine reports or from your own site audit, surfaces technical issues such as broken links, duplicate content, or missing meta tags that may impact your site’s SEO performance.

- Improving Rankings: A well-crawled website with high-quality content is more likely to rank higher in search results, driving organic traffic and visibility.

Crawling Best Practices

To ensure that your website is effectively crawled by search engines, consider implementing the following best practices:

- Create a Sitemap: Submitting a sitemap to search engines helps them understand the structure of your site and prioritize crawling important pages.

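- Optimize Robots.txt: Use a robots.txt file to control which pages search engines can crawl on your site. Note that robots.txt governs crawling, not indexing: a blocked URL can still be indexed if other pages link to it, so to keep a page out of search results, leave it crawlable and add a noindex meta tag instead.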

- Internal Linking: Implement a logical internal linking structure to guide crawlers through your site and ensure all pages are discoverable.

- Avoid Duplicate Content: Prevent duplicate content issues that can confuse crawlers by using canonical tags or 301 redirects where necessary.
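
As a rough illustration of the robots.txt practice above, the following sketch uses Python's standard `urllib.robotparser` module to check which URLs a set of rules allows. The robots.txt content, the paths, and the `is_crawlable` helper are made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the paths and sitemap URL are assumptions.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="*"):
    """Return True if the parsed rules allow user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


blocked = is_crawlable("https://example.com/admin/settings")
allowed = is_crawlable("https://example.com/blog/crawling-basics")
sitemaps = parser.site_maps()  # available in Python 3.8 and later
```

Running a check like this before deploying a robots.txt change is one way to catch a rule that accidentally blocks pages you want crawled.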

In conclusion, crawling is an indispensable component of SEO that directly impacts how well your website performs in search engine rankings. By understanding the importance of crawling and implementing best practices to optimize it, you can enhance your site’s visibility, attract more organic traffic, and achieve better SEO results overall.

5 Essential Tips to Enhance SEO Crawling for Your Website

- Ensure your website has a clear and organized site structure for easy crawling by search engines.

- Use descriptive and relevant meta tags, including title tags and meta descriptions, to help search engines understand your content.

- Create an XML sitemap to provide search engines with a roadmap of your website’s pages for efficient crawling.

- Optimize your website’s loading speed to improve crawlability and user experience.

- Regularly monitor and fix crawl errors reported in Google Search Console to ensure search engines can access your content.

Ensure your website has a clear and organized site structure for easy crawling by search engines.

A clear, organized site structure is the foundation of efficient crawling. A well-structured site not only enhances user experience but also helps search engine bots navigate and index your content. By organizing your website with logical categories, hierarchical menus, and internal linking, you make it easier for search engines to crawl each page and understand its relevance, ultimately improving your site’s visibility and ranking in search results.
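
One way to reason about site structure is click depth: the minimum number of links a crawler must follow from the home page to reach a page. The sketch below computes it with a breadth-first search over a hypothetical internal-link graph. The `click_depths` function and the `SITE_LINKS` data are illustrative assumptions, not output from any real tool.

```python
from collections import deque


def click_depths(links, home):
    """Return {page: minimum number of clicks from home} via breadth-first search."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: page -> pages it links to.
SITE_LINKS = {
    "/": ["/products", "/blog"],
    "/products": ["/products/widgets"],
    "/blog": ["/blog/crawling-basics"],
    "/products/widgets": [],
    "/blog/crawling-basics": ["/products/widgets"],
    "/old-landing-page": ["/"],  # no other page links here
}

depths = click_depths(SITE_LINKS, "/")
# Pages that exist but cannot be reached by following links from the home page.
orphans = sorted(set(SITE_LINKS) - set(depths))
```

Pages that end up in `orphans` are unreachable through internal links alone, so a crawler would have to learn about them some other way, such as a sitemap.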

Use descriptive and relevant meta tags, including title tags and meta descriptions, to help search engines understand your content.

Descriptive, relevant meta tags, such as title tags and meta descriptions, help search engines interpret the pages they crawl. These tags provide concise summaries of your web pages’ content, giving search engines a clearer picture of each page’s relevance and context. By incorporating targeted keywords and compelling descriptions within your meta tags, you improve the chances of search engines indexing your pages accurately and displaying them well in search results. This practice not only improves visibility but also encourages higher click-through rates from users seeking content aligned with their search queries.
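
A simple audit can flag pages that are missing these tags. The following is a minimal sketch using Python's standard `html.parser`. The `MetaAudit` class, the `audit` function, and the two sample pages are hypothetical, and the check only tests for presence, not quality.

```python
from html.parser import HTMLParser


class MetaAudit(HTMLParser):
    """Records the <title> text and the meta description of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html):
    """Return a list of missing-tag problems found in the given HTML."""
    parser = MetaAudit()
    parser.feed(html)
    problems = []
    if not parser.title.strip():
        problems.append("missing title")
    if not (parser.description or "").strip():
        problems.append("missing meta description")
    return problems


# Hypothetical sample pages.
GOOD_PAGE = (
    "<html><head><title>Crawling Basics</title>"
    '<meta name="description" content="How search engine crawlers discover pages.">'
    "</head><body></body></html>"
)
BAD_PAGE = "<html><head><title></title></head><body>No metadata</body></html>"

good_problems = audit(GOOD_PAGE)
bad_problems = audit(BAD_PAGE)
```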

Create an XML sitemap to provide search engines with a roadmap of your website’s pages for efficient crawling.

An XML sitemap is a structured file that gives search engines a clear roadmap of your site’s pages, facilitating efficient crawling and indexing. It helps search engine bots discover and prioritize important content, including pages that are hard to reach through internal links. A sitemap does not guarantee that every listed page will be indexed, but it makes it more likely that all relevant pages are found, which improves the overall visibility and accessibility of the website in search results.
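
For illustration, a small sitemap can be generated with Python's standard `xml.etree.ElementTree` module, using the `urlset`, `url`, `loc`, and `lastmod` elements from the sitemaps.org protocol. The `build_sitemap` helper, the URLs, and the dates below are made-up sample values.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is 'YYYY-MM-DD' or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and modification dates.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/crawling-basics", None),
])
```

The resulting string can be saved as `sitemap.xml` at the site root and submitted through each search engine's webmaster tools.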

Optimize your website’s loading speed to improve crawlability and user experience.

Optimizing your website’s loading speed enhances both crawlability and user experience. When your server responds quickly, search engine bots can fetch more pages in the same amount of time, so more of your content gets crawled and indexed within your crawl budget. Slow or failing responses have the opposite effect and can lead crawlers to reduce how often they visit. A fast-loading website also improves the overall user experience, reducing bounce rates and increasing engagement. By improving your site’s loading speed, you make it more search engine-friendly and create a more satisfying experience for your visitors.

Regularly monitor and fix crawl errors reported in Google Search Console to ensure search engines can access your content.

Regularly monitoring and addressing crawl errors reported in Google Search Console is a crucial step in maintaining the accessibility of your website’s content to search engines. By promptly fixing these errors, such as broken links or server issues, you ensure that search engine bots can effectively crawl and index your web pages. This proactive approach not only helps in improving your site’s overall SEO performance but also enhances its visibility and ranking potential in search results.
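
When working through a list of crawl errors, it can help to group them by the kind of fix they need. The sketch below sorts URLs into rough buckets by HTTP status code. The `triage` function and the `CRAWL_RESULTS` data are hypothetical and do not reflect the export format of Google Search Console or any other tool.

```python
from collections import defaultdict


def triage(results):
    """Group (url, http_status) pairs into rough crawl-issue buckets."""
    buckets = defaultdict(list)
    for url, status in results:
        if 200 <= status < 300:
            continue  # fetched successfully, nothing to fix
        if 300 <= status < 400:
            buckets["redirect"].append(url)
        elif status in (404, 410):
            buckets["not found"].append(url)
        elif 500 <= status < 600:
            buckets["server error"].append(url)
        else:
            buckets["other"].append(url)
    return dict(buckets)


# Hypothetical crawl results: (path, HTTP status code).
CRAWL_RESULTS = [
    ("/", 200),
    ("/old-page", 404),
    ("/promo", 301),
    ("/checkout", 503),
    ("/members", 403),
]

issues = triage(CRAWL_RESULTS)
```

Broken links usually appear in the "not found" bucket and server issues in the "server error" bucket. The two call for different fixes: redirecting or restoring the page in the first case, investigating hosting or application failures in the second.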